History of Public Health Documentation: From Paper to Electronic Health Records

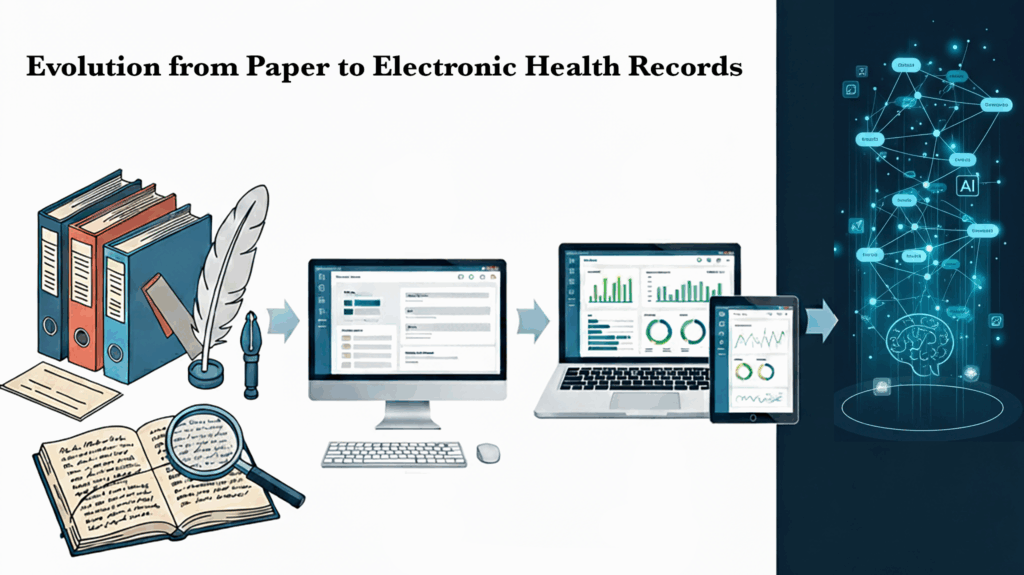

Public health records have evolved considerably over the past two centuries. Understanding that history helps explain why the systems we work with today are the way they are and what we might look forward to in the future.

Early Paper-Based Documentation

Systematic public health documentation took shape in the 19th century with handwritten ledgers and mortality tables used to monitor infectious diseases like cholera, smallpox, and tuberculosis.

Florence Nightingale was a pioneer in the 1850s in using data to advocate for public health reform. Her key methods inform how public health data has been recorded ever since.

- Systematic Records – Florence Nightingale systematically collected and analyzed data to provide evidence for her arguments. She advocated for standardized forms to ensure consistent data collection.

- Statistical Analysis – She had a strong grounding in mathematics and statistics and applied it to the data she collected during the Crimean War. Her analysis revealed that the majority of soldier deaths were due to preventable diseases caused by poor sanitation rather than battle wounds.

- Data Visualization – To communicate her findings, she created a polar area chart now known as the Nightingale Rose or “coxcomb” diagram, a circular relative of the histogram in which the area of each wedge represents a value. It made complex data accessible, and she used it to compare death rates before and after sanitation reform, illustrating that improving sanitation was the most effective way to save lives.
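The geometry behind a coxcomb-style chart can be sketched in a few lines of Python. Every wedge spans the same angle, and the wedge's area (not its radius) carries the value, so the radius grows with the square root of the count. The function name `wedge_radii` and the monthly counts below are assumptions made up for illustration; they are not Nightingale's actual figures.

```python
import math

# Hypothetical monthly death counts, invented purely for illustration.
# These are NOT Nightingale's actual figures.
monthly_deaths = {"Jan": 320, "Feb": 250, "Mar": 120, "Apr": 45}


def wedge_radii(values):
    """Return a radius per label so that wedge AREA is proportional to the value."""
    angle = 2 * math.pi / len(values)  # every wedge spans the same angle
    # Area of a circular sector = (angle / 2) * r**2, so r = sqrt(2 * value / angle)
    return {label: math.sqrt(2 * v / angle) for label, v in values.items()}


radii = wedge_radii(monthly_deaths)
for label, r in radii.items():
    print(f"{label}: radius {r:.2f}")
```

Scaling by area rather than radius keeps large values from looking disproportionately large, since doubling a radius would quadruple the visible area of a wedge.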

By the early 20th century, standardized paper forms—such as birth and death certificates and immunization records—became common, laying the groundwork for systematic public health surveillance.

Computerized Systems and Early Digitalization

The shift from paper to digital records began in the 1960s and 1970s as computer technology advanced. During these decades, academic medical centers and government initiatives developed early electronic health record (EHR) systems [Magazine Science, Wikipedia 1]. The concept of the EHR itself dates to this period [ScienceDirect].

Standalone Applications & System Integration (1980s–1990s)

Throughout the 1980s and 1990s, personal computers and relational databases enabled the creation of public health information systems—such as early immunization registries and electronic vital records—that were capable of faster data sharing among local health agencies [Tebra].

The American Nurses Association recognized the Omaha System as a standardized terminology to support nursing practice in 1992.

The EHR Era and Broader Adoption

By the late 20th and early 21st centuries, EHR systems gained wider adoption in both clinical and public health contexts. Unlike hospital EHRs—which focused on individual clinical care—public health systems used EHR technologies for surveillance, immunization tracking, and population health monitoring.

U.S. Policy Catalyst: The HITECH Act

A pivotal milestone came with the HITECH Act of 2009, which allocated roughly $27 billion to incentivize EHR adoption and meaningful use of interoperable systems [Magazine Science, Stanford Medicine]. This act provided a foundation for integrating clinical and public health record keeping.

Standards and Interoperability

Standardization played a critical role in enabling systems to communicate effectively. The Fast Healthcare Interoperability Resources (FHIR) standard, developed by HL7, provided a flexible and modern framework for exchanging health data across systems—facilitating public health surveillance and interoperability [Wikipedia 2].
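To give a sense of what a FHIR exchange looks like in practice, here is a minimal sketch of an immunization record expressed as a FHIR-style JSON resource. The element names follow the Immunization resource as defined in FHIR R4, but the helper functions `build_immunization` and `missing_fields`, the patient identifier, and the vaccine text are hypothetical. The field check is a simplistic illustration, not a real FHIR validator.

```python
import json

# Top-level elements that FHIR R4 requires on an Immunization resource.
# "occurrence" is a choice type, so it appears as occurrenceDateTime or occurrenceString.
REQUIRED = ("status", "vaccineCode", "patient")


def build_immunization(patient_id, vaccine_text, date):
    """Build a minimal Immunization resource as a plain dict (illustrative helper)."""
    return {
        "resourceType": "Immunization",
        "status": "completed",
        "vaccineCode": {"text": vaccine_text},
        "patient": {"reference": f"Patient/{patient_id}"},
        "occurrenceDateTime": date,
    }


def missing_fields(resource):
    """List required elements that are absent (a toy check, not full validation)."""
    missing = [field for field in REQUIRED if field not in resource]
    if not any(key.startswith("occurrence") for key in resource):
        missing.append("occurrence[x]")
    return missing


# Hypothetical patient id and vaccine description, for illustration only.
record = build_immunization("example-123", "Influenza vaccine (illustrative)", "2024-10-01")
payload = json.dumps(record, indent=2)
print(payload)
print("Missing fields:", missing_fields(record))
```

Because every system reads and writes the same element names, a clinic's EHR and a health department's immunization registry can exchange a record like this without a custom translation layer for each pairing.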

Nightingale Notes from Champ Software has the standardized Omaha System taxonomy integrated into its charting. This taxonomy, recognized by the American Nurses Association, promotes consistent, high-quality clinical documentation and communication among public health professionals for improved patient outcomes and a better overall healthcare experience.

Current and Emerging Trends

Today’s public health documentation increasingly relies on systems like electronic case reporting (eCR) and Health Information Exchanges (HIEs), which support real-time data sharing and epidemic tracking [Reference]. Innovative platforms like the MIMIC‑III database have enabled powerful research capabilities and data-driven public health insights [arXiv].

Key concepts from the mid-1800s still hold true today. Standardizing data, analyzing it in depth, and presenting it to decision-makers in accessible ways remain central to public health improvement. The tools available to us are faster, more interconnected, and more accessible than ever. The implications of that for public health and privacy are still being decided.

Decade-by-Decade Timeline

1800s – Local health officials record disease outbreaks in handwritten ledgers; mortality statistics compiled manually.

1900s–1930s – Standardized paper forms (e.g., birth and death certificates) introduced for vital records and immunization tracking.

1940s–1950s – Early tabulating machines and punch-card systems used to process epidemiological data.

1960s–1970s – Mainframe computers adopted by health departments; concept of the electronic health record emerges in academic and research contexts.

1980s – Growth of relational databases enables more advanced registries; early immunization and cancer registries established. [Reference]

1990s – State-level electronic systems expand; wider use of networked databases for vital statistics and disease surveillance.

2000s – Public health agencies adopt digital reporting tools; EHR adoption accelerates in clinical care; interoperability becomes a policy priority.

2009 – The U.S. HITECH Act provides federal funding and incentives for meaningful use of EHRs, including public health reporting. [Wikipedia 1]

2010s – Emphasis on interoperability standards like HL7 FHIR; Health Information Exchanges (HIEs) and electronic case reporting gain momentum. [Wikipedia 2]

2020s – Integration of real-time analytics, big data, and AI for public health surveillance; global expansion of digital health systems to strengthen pandemic preparedness.

References

- “Electronic Health Records: Towards Digital Twins in Healthcare”, arXiv, Feb 2025.

- “History of EHRs in healthcare technology”, The Intake (Tebra), 12 Nov 2024.

- Omaha System Overview, https://www.omahasystem.org/overview

- “The Evolution of Electronic Health Records: From Paper to Digital Systems”, Reference, 20 May 2025.

- “The Evolution of Electronic Health Records: From Paper to Interoperable Systems”, Magazine Science, 12 Apr 2025.

- “Twenty-Five Years of Evolution and Hurdles in Electronic Health Records and Interoperability in Medical Research: Comprehensive Review”, ScienceDirect, Volume 27, 13 Jan 2025.

- “White Paper: The Future of Electronic Health Records”, Stanford Medicine, Sept 2018.

- Wikipedia 1: “Health Information Technology for Economic and Clinical Health Act”, Wikipedia.org, accessed 01 Sep 2025.

- Wikipedia 2: “Fast Healthcare Interoperability Resources”, Wikipedia.org, accessed 01 Sep 2025.